Is my SD card resilient enough for production ESXi usage?

If you are trying to buy a new host: “No.”

If you have an existing install: It depends…

Now that 7 Update 3 is out, there is more to discuss about where things are going.

A few points of clarification:

- The deprecation of SD/USB devices as the sole boot and OS-related storage for ESXi was announced, but to be clear: **this does NOT mean that support for these configurations was pulled from vSphere 7 Update 3.** I put this in bold because I’ve heard this misconception quite a few times.

- For people who are not in a position to upgrade their boot device, we will continue to support SD/USB boot for the 7.x release. I will caveat this with PLEASE upgrade to 7 Update 3 (or 7 U2c at a minimum), as a number of fixes and mitigations have been applied to lower the chances of premature device failure.

What was fixed?

See this KB and the release notes here. Additionally, 7 Update 3 does a better job of making customers aware they are running in a degraded state where only a low endurance boot device exists for system usage. The limitations of using a RAM disk for redirection are noted below.

What are my paths forward? (Greenfield)

For net-new host purchases, I ask you to move away from USB/SD card boot devices. It will make life simpler, and the additive cost for a 128GB boot device vs a pair of larger capacity SD cards and the controller for them is less than you would think. For those that can, this also will work for brownfield.

What are my paths forward? (Brownfield)

There are a few options.

- Replace the boot devices – Note this requires a reinstallation of ESXi. Configurations can be moved using various methods. To speed up this process you can use this KB to perform a backup and restore. Note that you will need to restore to the exact same ESXi build.

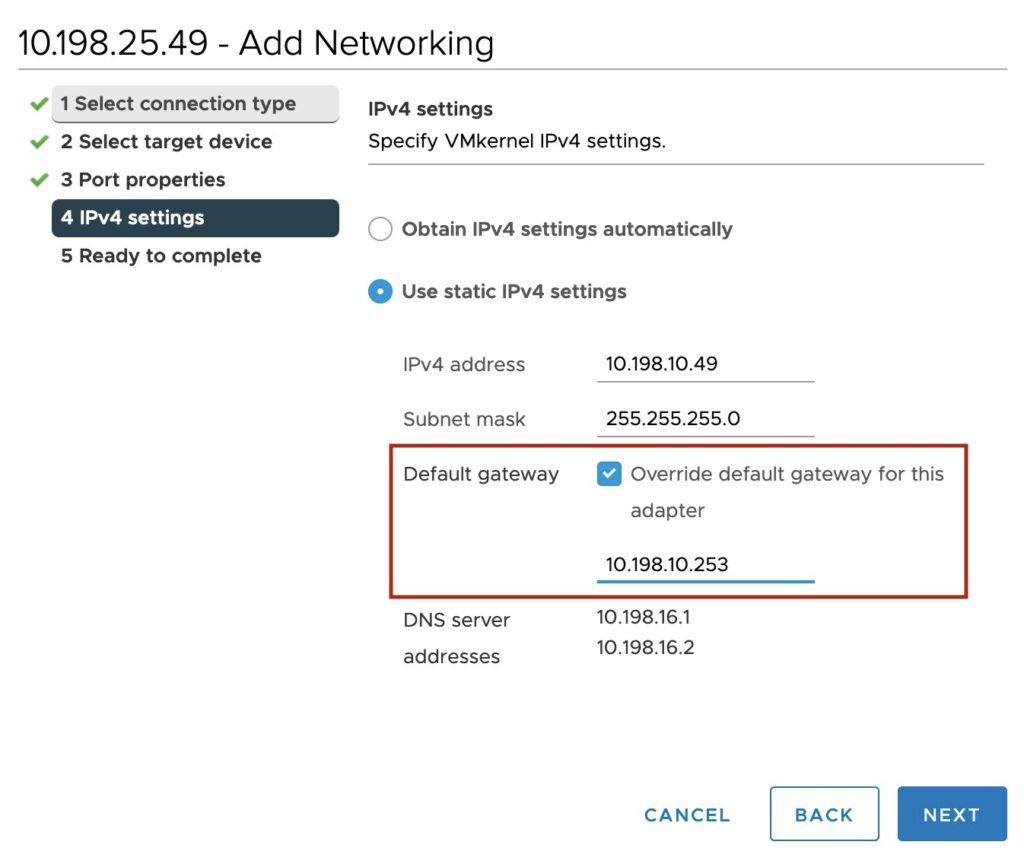

- Legacy configuration but still supported – This allows you to keep operating with the existing boot install on the device without having to perform a reinstall. This KB outlines a new boot flag that will automatically format a RAW (i.e., no partition tables) device that is 128GB or larger and consume it for OSDATA usage. This will allow you to move forward with the existing install on SD/USB in a supported manner. Simply adding a properly sized M.2 SSD to your host and using the autoPartition=TRUE boot flag should create and redirect the necessary bits to keep running in a configuration that is neither degraded nor deprecated. Note this configuration will be supported on future releases, but given the added complexity/cost vs. just using a proper boot device to begin with, it is not something I recommend for greenfield (hence why it’s called Legacy/supported).

- AutoDeploy – For forward compatibility with new features, I would ask that you start moving in the direction of Stateful Installs for AutoDeploy.

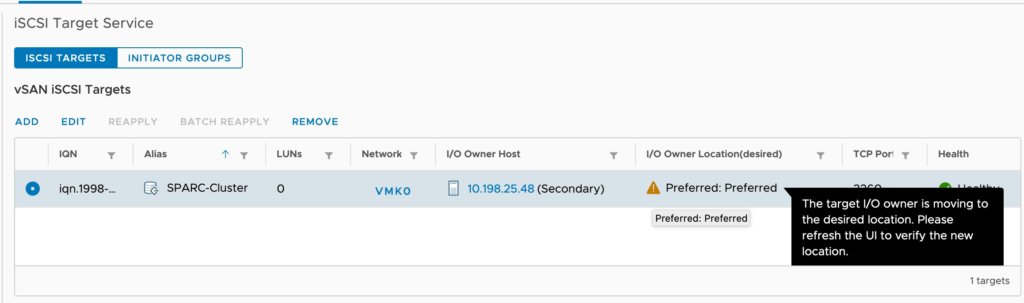

- Boot from SAN – Keep on rocking, just make those LUNs a bit larger please. VMware wants to see 32GB at a minimum.
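To make the size requirements in the options above concrete, here is a minimal sketch of the two checks: a device for the autoPartition route needs to be raw and at least 128GB, and a boot-from-SAN LUN should be at least 32GB. The `Device` class and both function names are hypothetical and made up for illustration; this is not a VMware API, and treating "GB" as decimal gigabytes is my assumption.

```python
from dataclasses import dataclass

# Thresholds come from the text above. Treating "GB" as decimal
# gigabytes is an assumption made for this sketch.
AUTOPARTITION_MIN_GB = 128
BOOT_FROM_SAN_MIN_GB = 32


@dataclass
class Device:
    """Hypothetical description of a candidate device (not a VMware structure)."""
    name: str
    size_gb: float
    has_partition_table: bool = False


def eligible_for_autopartition(dev: Device) -> bool:
    """True if the device is raw (no partition table) and at least 128GB."""
    return (not dev.has_partition_table) and dev.size_gb >= AUTOPARTITION_MIN_GB


def san_lun_large_enough(size_gb: float) -> bool:
    """True if a boot-from-SAN LUN meets the 32GB minimum."""
    return size_gb >= BOOT_FROM_SAN_MIN_GB


if __name__ == "__main__":
    candidates = [
        Device("m2-ssd", 240),
        Device("small-ssd", 64),
        Device("reused-ssd", 240, has_partition_table=True),
    ]
    for dev in candidates:
        verdict = "eligible" if eligible_for_autopartition(dev) else "not eligible"
        print(f"{dev.name}: {verdict}")
```

A check like this is only useful for planning an inventory of hosts; the KB remains the authority on what the boot flag actually accepts.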

What is this warning about Degraded Mode?

Degraded mode is a state where logs and state might not be persistent (they are lost when the host is rebooted), with the side effect that boot up can be slower.

The /scratch partition will be created on a RAMDisk under a /tmp folder with a limited space of 250 MB. This is not recommended, and it will impact the ESXi host performance once /tmp runs out of capacity.
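If you want a quick way to reason about whether a host is in this situation, the sketch below classifies a resolved scratch path as RAM disk backed when it sits under /tmp, and computes how much of the 250 MB is left. The function names are hypothetical, and the "under /tmp means RAM disk" rule is a simplification taken from the description above, not an official detection method.

```python
import os
import posixpath

# Values taken from the description above; the helper names are hypothetical.
RAMDISK_PREFIX = "/tmp"
RAMDISK_SCRATCH_LIMIT_MB = 250


def scratch_is_on_ramdisk(resolved_path: str) -> bool:
    """True if the resolved scratch location is /tmp or lives under it."""
    normalized = posixpath.normpath(resolved_path)
    return normalized == RAMDISK_PREFIX or normalized.startswith(RAMDISK_PREFIX + "/")


def scratch_headroom_mb(used_mb: float) -> float:
    """Space left (in MB) before a RAM disk backed scratch hits the 250 MB limit."""
    return max(RAMDISK_SCRATCH_LIMIT_MB - used_mb, 0.0)


if __name__ == "__main__":
    # realpath follows symlinks, so a /scratch link is resolved to its target.
    target = os.path.realpath("/scratch")
    state = "RAM disk (degraded)" if scratch_is_on_ramdisk(target) else "persistent"
    print(f"/scratch resolves to {target}: {state}")
```

The path check is kept as a pure function on a string so it can be run against paths collected from many hosts, rather than only on the machine being inspected.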

Why is this bad? Why prefer local storage for logging?

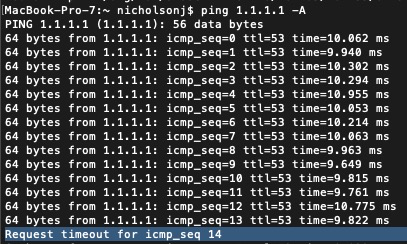

There are a lot of advantages to redirecting locally: consistency of performance, as well as the ability to collect logs on issues that impact the availability of the storage network or HBA (for example, the NIC or FC HBA firmware crashing). Note Boot from SAN is still completely an option here, but (by virtue of physics) a quality local device will always be in a superior position to collect logs in these specific situations.

How do I move my install to a new device?

See this KB

Couldn’t a Big RAMDISK just fix this?

Ehhhh, this isn’t a long-term solution. See the bottom of this KB for this discussion. Beyond the cost of RAM, the bigger issue is volatility. 99% of customers I talk to want support and engineering to be able to identify the source of problems, and this becomes incredibly hard when all logs and crash dumps are destroyed on host restart.

What about NVMe SD cards (SDExpress)?

This is something I’ve honestly asked engineering PM about. They are shipping in small quantities right now. My biggest concern looking at the hardware itself is thermal throttling causing performance to yo-yo instead of staying consistent. For logs and crash dumps they look alright, but future demands on the OSDATA may require more performance. This is partly why vSphere 7 at GA raised the endurance and performance requirements for boot devices, as preparation for future demands. Technically they will look like an NVMe device, so I assume at least for home lab usage they should work. If anyone has any samples lying around and wants to test them, shoot me a message on Twitter (@Lost_Signal).

I have a home lab, I’m out of drive bays, and I’m curious about cheap, non-supported options?

Personally, I went and bought a $12 PCI-E to M.2 (SATA) adapter. They also make NVMe-compatible brackets. Just make sure the bracket you get supports your drive type. No need to spend hundreds of dollars upgrading your hosts in the lab.

Where can I find this information on an official VMware.com page?

https://core.vmware.com/resource/esxi-system-storage-faq

Why did this blog post just say “No” before?

The challenge in giving nuanced guidance is that people tend to read “It’s supported” and ignore the rest of the sentence about why something is a bad idea. Given that the blog post explaining this, the KBs, and the changes in U2c and U3 were still in the works, I wanted people looking to buy a new host to get a no-nonsense response on hardware selection.