How to pick NICs for VMware vSAN-powered HCI

This post has been a long time coming, as Network Interface Cards (NICs) are an often overlooked component. Many people have mistakenly assumed all NICs are the same or are simple commodities. Note that most of this advice also applies generically to vSphere and to Ethernet storage. Here is a short list of features and considerations when picking a network interface card. I’m hoping this series will expand into more information on picking switches, as well as troubleshooting NICs and switches.

Offload Features

LRO/LSO – Large Receive Offload (LRO) and Large Send Offload (LSO) move packet handling from the CPU to the NIC. LSO lets the host hand the NIC a large buffer that the NIC breaks up into wire-sized packets when transmitting. LRO consolidates received packets into larger ones before passing them up the stack. Note: TCP Segmentation Offload (TSO) is a very common form of LSO, and you will often see the terms used interchangeably. Both reduce CPU overhead. The VMware Performance Team has a great blog showcasing what this looks like for virtual machines. LRO can cut CPU overhead on 64KB workloads by as much as 90%.
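To make the segmentation side concrete, here is a back-of-the-envelope sketch of how many wire-sized TCP segments a single 64KB send turns into. It is my own illustration, not anything taken from ESXi. Without TSO, the host CPU builds every one of these segments. With TSO, the NIC does the cutting. The sketch assumes IPv4 and TCP headers with no options (20 bytes each), so real MSS values are slightly smaller, for example when TCP timestamps are enabled.

```python
import math

# Assumption: IPv4 and TCP headers without options (20 bytes each).
IPV4_HEADER_BYTES = 20
TCP_HEADER_BYTES = 20


def mss_for_mtu(mtu: int) -> int:
    """TCP maximum segment size that fits in one IP packet of this MTU."""
    return mtu - IPV4_HEADER_BYTES - TCP_HEADER_BYTES


def segments_per_send(payload_bytes: int, mtu: int) -> int:
    """How many wire-sized segments one large send is split into.

    Without TSO the host stack builds each segment on the CPU; with TSO
    the whole buffer is handed to the NIC, which performs this split.
    """
    return math.ceil(payload_bytes / mss_for_mtu(mtu))


if __name__ == "__main__":
    io_size = 64 * 1024  # one 64KB send, e.g. a resync-sized I/O
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: MSS {mss_for_mtu(mtu)} bytes, "
              f"{segments_per_send(io_size, mtu)} segments per 64KB send")
```

At a standard 1500-byte MTU, that is 45 segments per 64KB send. At a 9000-byte MTU, it is 8.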

Receive Side Scaling (RSS) – Helps distribute load across multiple CPU cores. At higher throughput, a single CPU thread may not be able to fully saturate larger network interfaces. In sample testing, a 40Gbps NIC could only reach 15Gbps when using a single core. RSS is also critical for VXLAN/NSX performance. Note that RSSv2 is supported by only a limited subset of cards (it appears to allow balancing at a more granular level).
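It helps to see how RSS picks a core. Most NICs hash each flow’s addresses and ports with a Toeplitz hash. They then use the low bits of the result to index an indirection table of receive queues. Every packet of a flow lands on the same queue (and core), while different flows spread out.

The Python below is an illustration under several assumptions:
- The 40-byte key is the sample key commonly used in RSS documentation. Drivers may program their own.
- The 128-entry table spread over 4 queues is hypothetical, as are the IP addresses.
- ESXi drivers, and RSSv2 in particular, may select queues differently.

```python
from __future__ import annotations

import ipaddress
import struct

# Assumption: the sample 40-byte key commonly used in RSS documentation.
# Real drivers may program a different (often random) key.
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcb"
    "ae7b30b477cb2da38030f20c6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: for every set input bit, XOR in the 32-bit
    window of the key that starts at that bit position (MSB first)."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    data_bits = len(data) * 8
    if key_bits < data_bits + 32:
        raise ValueError("key must be at least 32 bits longer than the input")
    result = 0
    for i in range(data_bits):
        if data[i // 8] & (0x80 >> (i % 8)):
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def flow_input(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """IPv4/TCP hash input: source IP, destination IP, source port, destination port."""
    return (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))


def rss_queue(flow: bytes, indirection_table: list[int]) -> int:
    """Map a flow to a receive queue via the indirection table.

    Hardware uses the low-order hash bits; for a power-of-two table size,
    taking the hash modulo the table length selects the same entry.
    """
    return indirection_table[toeplitz_hash(RSS_KEY, flow) % len(indirection_table)]


if __name__ == "__main__":
    table = [i % 4 for i in range(128)]  # hypothetical: 128 entries over 4 queues
    for src_port in (49152, 49153, 49154, 49155):
        # Hypothetical addresses; 2233 is the vSAN transport port.
        flow = flow_input("192.168.10.11", "192.168.10.12", src_port, 2233)
        print(src_port, "-> queue", rss_queue(flow, table))
```

The takeaway for sizing is that a single flow always hashes to one queue. RSS raises aggregate throughput across many flows; it does not speed up any one flow past what a single core can do.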

Geneve/VXLAN encapsulation support – For customers using NSX, hardware support for overlays again helps increase performance and should be added to the shopping list when selecting a NIC.
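Overlay offload matters partly because every encapsulated frame carries an extra set of headers. Checksum, TSO and RSS logic all have to see past them. The sketch below shows how much each frame grows, and therefore the minimum underlay MTU. It assumes fixed header sizes and no inner VLAN tag unless one is specified. NSX-T uses Geneve, whose options make the overhead variable; NSX for vSphere uses VXLAN.

```python
# Fixed header sizes in bytes.
INNER_ETHERNET = 14  # the guest's Ethernet header, carried inside the tunnel
INNER_DOT1Q = 4      # optional inner 802.1Q VLAN tag
OUTER_UDP = 8
OUTER_IP = {"ipv4": 20, "ipv6": 40}
VXLAN_HEADER = 8
GENEVE_BASE = 8      # Geneve options add 0-252 bytes on top (4-byte units)


def underlay_mtu(inner_mtu: int, encap: str = "vxlan", outer: str = "ipv4",
                 geneve_options: int = 0, inner_vlan_tag: bool = False) -> int:
    """Minimum underlay IP MTU needed to carry inner_mtu without fragmenting."""
    if encap == "vxlan":
        if geneve_options:
            raise ValueError("options only apply to Geneve")
        tunnel_header = VXLAN_HEADER
    elif encap == "geneve":
        if geneve_options % 4 or not 0 <= geneve_options <= 252:
            raise ValueError("Geneve options are 0-252 bytes in 4-byte units")
        tunnel_header = GENEVE_BASE + geneve_options
    else:
        raise ValueError(f"unknown encapsulation: {encap!r}")
    inner_frame = inner_mtu + INNER_ETHERNET + (INNER_DOT1Q if inner_vlan_tag else 0)
    return inner_frame + tunnel_header + OUTER_UDP + OUTER_IP[outer]


if __name__ == "__main__":
    print("VXLAN/IPv4:", underlay_mtu(1500))
    print("Geneve/IPv4, 8B options:", underlay_mtu(1500, "geneve", geneve_options=8))
    print("VXLAN/IPv6:", underlay_mtu(1500, outer="ipv6"))
```

With a 1500-byte inner MTU, this gives 1550 bytes for VXLAN over IPv4. That is why overlay underlays are configured with headroom above 1500.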

RDMA over Converged Ethernet (RoCEv1/RoCEv2) – vSAN doesn’t support RDMA yet. However, RDMA for vSphere VMs and iSER (iSCSI Extensions for RDMA) are shipping today, and hopefully other traffic classes will gain support in the future. RDMA significantly lowers latency and CPU usage while increasing throughput. Note that RoCE avoids the use of TCP: RoCEv1 runs directly over Ethernet, and RoCEv2 runs over UDP/IP. iWARP (a competing standard) runs RDMA over TCP.

NSX-T Virtual Distributed Switch (N-VDS) – NICs that support this will list “N-VDS Enhanced Data Path” support in the vSphere VCG. This includes NUMA-aware traffic flow over the Enhanced Data Path. While this is normally reserved for NFV workloads, the gains in throughput are massive. This blog is a good starting point.

Misc CNA/Storage Options

iSCSI HBAs have come in and out of vogue over the years. In general, I have some concerns about how well vendors QA this feature. It sees very little use now that the software initiator holds the overwhelming majority of the market.

FCoE has been removed/deprecated from some Intel NICs and is, in general, falling out of favor. A software FCoE initiator now exists, but the fad of FCoE (which was always a bridge technology) seems to be slowly going away. For those still using FCoE CNAs, make sure that

NVMe over Fabrics (NVMe-oF) – Support for NVMe over Ethernet fabrics is slowly gaining interest among customers looking for the lowest latency possible. NVMe-oF can also run over Fibre Channel, but 100Gbps RDMA Ethernet is a promising option.

Other Considerations

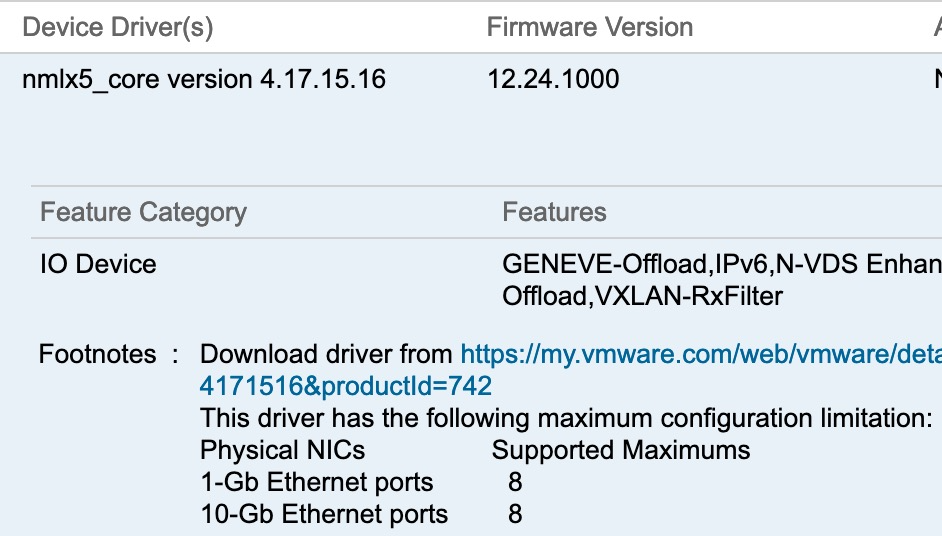

Supported Maximums – Not a huge issue for most, but some NICs have caveats on the maximum number that can be installed in a single host. This is often tied to driver memory allocations. You will find this information in the vSphere Configuration Maximums, as well as in the notes on the NIC’s VCG entry.

Link Layer Discovery Protocol (LLDP) – Normally a software feature, but some NIC families bizarrely implement it in hardware. This can have… interesting results, depending on the implementation.

Stable, fast drivers and firmware, and engineers to maintain them – I’m not sure why I have to list this as a “NIC feature”, but it is. Some NICs have had hilariously unstable firmware for years (note this isn’t a vSphere issue, but a general issue across OS platforms). Some vendors will take an issue and rapidly RCA it in their testing lab. Others will ask you to be a crash test dummy and run debug drivers in production “until it happens again.” Questions to ask an OEM are: “If we have an issue, do you have the hardware to recreate it, and where physically is this lab?” If you are repeatedly asked to run async drivers (that are not tested/validated by VMware), this may be a sign that the vendor doesn’t have adequate engineering behind the card.

Flow control – This is something you should really only be using on 1Gbps; otherwise, turn it off. It’s not a problem in itself, but there are frankly better ways (CoS/DSCP) to prioritize traffic under contention.
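For reference, DSCP occupies the upper six bits of the IP TOS/Traffic Class byte, and switches commonly derive an 802.1p CoS value from it. Below is a minimal sketch of that bit layout. The DSCP-to-CoS rule shown (keep the top three bits) is one common default mapping, not a universal one. In a vSphere environment, marking would typically be done by the vDS traffic filtering and marking policy or by the physical switch, not by application code like `mark_socket` here.

```python
import socket

# DSCP values from the standard per-hop behaviors (RFC 2474/2597/3246).
EF = 46    # Expedited Forwarding
AF31 = 26  # Assured Forwarding class 3, low drop precedence


def tos_byte(dscp: int, ecn: int = 0) -> int:
    """Build the IPv4 TOS / IPv6 Traffic Class byte: DSCP in the top 6 bits."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value (0-63)")
    if not 0 <= ecn <= 3:
        raise ValueError("ECN is a 2-bit value (0-3)")
    return (dscp << 2) | ecn


def cos_from_dscp(dscp: int) -> int:
    """One common default DSCP-to-CoS mapping: keep the top 3 DSCP bits."""
    return tos_byte(dscp) >> 5


def mark_socket(sock: socket.socket, dscp: int) -> None:
    """Ask the OS to stamp this DSCP value on the socket's outgoing IPv4 packets."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_byte(dscp))


if __name__ == "__main__":
    for name, dscp in (("EF", EF), ("AF31", AF31)):
        print(f"{name}: DSCP {dscp}, TOS byte {tos_byte(dscp)}, CoS {cos_from_dscp(dscp)}")
```

EF (46) becomes TOS byte 184 and maps to CoS 5. AF31 (26) becomes 104 and maps to CoS 3.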

Management APIs for CIM Provider and OCSD/OCBB Support – These can allow for better out-of-band monitoring of the NIC. If there are not good ways to

Wake on LAN – Really, you should be waking servers using out-of-band management, but
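For reference, the feature itself is simple. A Wake-on-LAN “magic packet” is six 0xFF bytes followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast. This is a minimal sketch. The MAC address, broadcast address and UDP port 9 are placeholders, and the NIC, BIOS and network all have to permit it.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Six 0xFF bytes followed by the 6-byte MAC address repeated 16 times."""
    mac_bytes = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(mac_bytes) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255",
                      port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common choice)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


if __name__ == "__main__":
    # Placeholder MAC: replace with the target host's NIC address.
    print(magic_packet("00:11:22:33:44:55").hex())
```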

So what do these features mean for a HCI Architect?

Lower host CPU usage means more CPU is available for processing storage and running virtual machines, enabling higher consolidation ratios.

Higher Throughput per core (as a result of LRO/TSO) – Eliminating unnecessary CPU usage means each core can deliver more throughput. This allows faster resync operations (commonly 64KB) as well as higher overall throughput. LRO/TSO/RSS help prevent single-threaded networking processes from becoming bottlenecks.

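Lower Packets Per Second (PPS) – By consolidating packets with TSO/LRO, fewer packets must traverse the host networking stack. TSO still puts MTU-sized frames on the wire, so PPS at the physical switches ultimately depends on frame size (for example, jumbo frames). Many switch ASICs have limits on how many PPS they can process. Past those limits they are forced to delay packets, negatively impacting performance.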
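To put numbers on switch PPS: at full line rate, link speed and frame size set the packet rate. The back-of-the-envelope sketch below assumes untagged Ethernet frames (14-byte header, 4-byte FCS). It also counts the 8-byte preamble and 12-byte inter-frame gap that every frame occupies on the wire.

```python
# Per-frame overhead on the wire beyond the IP MTU, for an untagged frame.
ETH_HEADER = 14
FCS = 4
PREAMBLE_SFD = 8
INTERFRAME_GAP = 12
WIRE_OVERHEAD = ETH_HEADER + FCS + PREAMBLE_SFD + INTERFRAME_GAP  # 38 bytes


def line_rate_pps(link_bps: float, mtu: int) -> float:
    """Packets per second needed to fill a link with full-MTU frames."""
    return link_bps / ((mtu + WIRE_OVERHEAD) * 8)


if __name__ == "__main__":
    for gbps in (10, 25, 100):
        for mtu in (1500, 9000):
            pps = line_rate_pps(gbps * 1e9, mtu)
            print(f"{gbps}Gbps, MTU {mtu}: {pps:,.0f} PPS")
```

At 10Gbps, that works out to roughly 812,744 PPS with a 1500-byte MTU. With a 9000-byte MTU, it drops to roughly 138,305 PPS.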

Caveat Emptor – Some NICs have had a troubled history with these features and may require driver/firmware updates to become stable. Some vendors may label a feature as an offload but in reality still process it on the CPU. Some features may only be supported in specific driver versions, or might even be quietly deprecated and scrubbed from the datasheets. Note, the

Feedback – Did I get something wrong? Did I not properly