vSAN 7 Update 1 What (Else) is new – iSCSI Stretched Cluster Support

Let me preface this discussion of iSCSI with my own personal opinion about iSCSI in the year 2020. With support for shared VMDKs for SCSI-3 PR applications, plus NFS and SMB shares, the need for iSCSI has reduced quite a bit. If you are using iSCSI today, I’d like to talk about some alternatives for delivering that shared access or external cluster storage requirement. That said, I know there are still some use cases for it, so let us go deeper on this topic.

Previously, iSCSI on vSAN was supported with stretched clusters only through a limited RPQ (Request for Product Qualification). Why was this?

Normally, vSAN stretched clusters implement a site locality construct to avoid adding unnecessary inter-site latency to the read IO path (they prefer to serve all reads from the local site). The challenge came from the fact that the iSCSI service had no awareness of the two fault domains, so you could easily end up in a situation where an iSCSI target was placed on the secondary site while serving IO to virtual machines on the preferred site. As a result, data held at the preferred site could be sent to an iSCSI target on the remote site, only to come back across the inter-site link as iSCSI traffic to a virtual machine running at the preferred site.

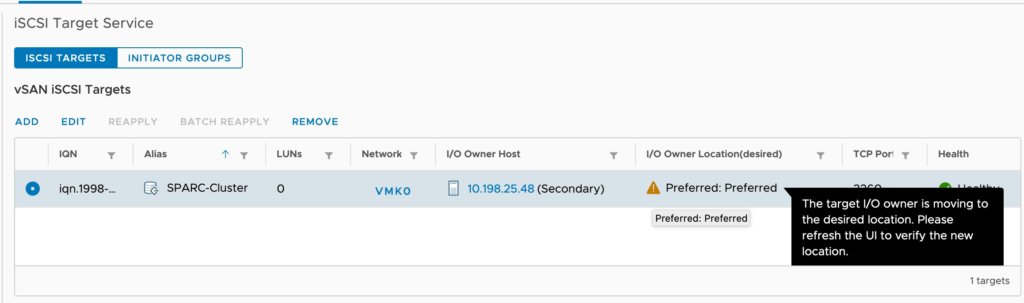
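To put the round trip described above into rough terms, here is a minimal sketch that only counts inter-site link crossings for a single read. The site names, the function names, and the one-way latency figure are all assumptions made up for illustration; none of them are vSAN measurements or vSAN APIs.

```python
# Toy model only: site names and the latency figure are illustrative
# assumptions, not measured vSAN values.

ASSUMED_ONE_WAY_MS = 2.5  # hypothetical one-way inter-site latency


def inter_site_crossings(initiator_site: str, target_site: str) -> int:
    """Count inter-site link crossings for one read round trip.

    If the iSCSI target sits at a different site than the initiator,
    the request crosses the link once and the response crosses it again.
    """
    return 0 if initiator_site == target_site else 2


def added_latency_ms(initiator_site: str, target_site: str) -> float:
    """Extra latency per read that is attributable to target placement."""
    return inter_site_crossings(initiator_site, target_site) * ASSUMED_ONE_WAY_MS


# Target co-located with the VM: no inter-site crossings.
print(added_latency_ms("preferred", "preferred"))
# Target on the other site: every read pays two crossings.
print(added_latency_ms("preferred", "secondary"))
```

The point of the sketch is simply that the penalty comes from where the target lives, not from where the data lives, which is why target placement is the thing worth controlling.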

To prevent this problem, vSAN 7 Update 1 now supports setting a preferred site where an iSCSI target should live. Note that in the event of a complete site failure, the preference will be disregarded and the service will cleanly fail over to the other side of the stretched cluster. This, combined with the other networking improvements and performance optimizations I mentioned in this blog, should help round out this new use case.

It is possible to relocate the preferred location. You will also receive a warning if something has caused a target to run at the non-preferred location.
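The behavior described above (honor the preferred site, fail over when it is gone, and warn when a target runs somewhere else) can be sketched as a small decision function. This is a conceptual model under my own assumptions, not the vSAN implementation or its API: `IscsiTarget`, `place_target`, and the site names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass
class IscsiTarget:
    # Hypothetical model of a target with a site preference.
    name: str
    preferred_site: str


def place_target(
    target: IscsiTarget, healthy_sites: Set[str]
) -> Tuple[str, Optional[str]]:
    """Pick a site for the target and return (site, warning).

    The preferred site wins whenever it is healthy. If it is not,
    the preference is set aside, the target moves to a surviving
    site, and a warning is returned.
    """
    if target.preferred_site in healthy_sites:
        return target.preferred_site, None
    if not healthy_sites:
        raise RuntimeError("no healthy site available for " + target.name)
    fallback = sorted(healthy_sites)[0]  # deterministic choice for the sketch
    warning = f"{target.name} is running at non-preferred site {fallback}"
    return fallback, warning


target = IscsiTarget(name="sql-cluster-target", preferred_site="site-a")

# Both sites healthy: the target stays at its preferred site.
print(place_target(target, {"site-a", "site-b"}))

# Preferred site lost: the target fails over and a warning is raised.
print(place_target(target, {"site-b"}))
```

Relocating the preferred location in this model is just a matter of changing `preferred_site` and evaluating the placement again.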

Again, when clustering Windows applications I tend to prefer the native VMDKs these days, but for those of you using iSCSI today (or already under RPQ), this may be a useful setting to look at.